Most transmission providers and grid operators have long used complex calculations to determine wind capacity credit, and these calculations largely flew under renewable developers’ radar. Now, transmission organizations are applying the same calculation to solar and storage. Others that did not use this method before are adopting it because of the amount of solar in their interconnection queues. With the threat of blackouts and brownouts, and with increasing penetrations of solar and storage in capacity forecasts, it is increasingly important for renewable developers to understand these calculations and voice their opinions.

Grid operators are pursuing two industry-accepted methods: average and marginal. Only the average method is favorable for renewable developers, because the marginal method discounts renewable capacity contributions and works in favor of thermal resources.

Effective Load Carrying Capability (ELCC)

When wind penetration was 1,000 MW and forecasts indicated 10,000 MW within 10 years due to state Renewable Portfolio Standards (RPS), grid operators had to find a way to decide how much capacity value to assign to wind. The need arose as most transmission organizations conducted wind integration studies. The wind industry quickly accepted a calculation that worked with existing Loss of Load Expectation (LOLE) models, because grid operators already ran LOLE models to set the planning reserve margin, which is where wind capacity credit was first needed. Until then, there had been no need to calculate capacity credit: it was widely accepted that thermal resources received 100% credit because they showed up to perform during peak demand hours. Thermal resource outage statistics were captured under a different metric, the demand-based Equivalent Forced Outage Rate (EFORd), which reflects how often a unit is unavailable during periods when it is called on to run.
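
To make the LOLE idea concrete, the sketch below shows one classic way an LOLE model turns thermal forced outage rates into a reliability metric. It builds a capacity outage probability table for the fleet, then adds up the probability that available capacity falls short of each day's peak load. This is a minimal illustration under stated assumptions: the fleet of identical 100 MW units, the 5% forced outage rate, and the function names `build_copt` and `lole_days` are hypothetical. Production LOLE models add hourly loads, maintenance schedules, imports, and many weather years.

```python
"""Minimal daily-peak LOLE sketch (hypothetical fleet and loads, illustration only)."""


def build_copt(units):
    """Capacity outage probability table.

    units: list of (capacity_mw, forced_outage_rate) tuples; outages are assumed independent.
    Returns {available_mw: probability}.
    """
    table = {0.0: 1.0}
    for capacity_mw, outage_rate in units:
        updated = {}
        for available_mw, prob in table.items():
            # Unit is available: its capacity adds to the total.
            up = available_mw + capacity_mw
            updated[up] = updated.get(up, 0.0) + prob * (1.0 - outage_rate)
            # Unit is on forced outage: available capacity is unchanged.
            updated[available_mw] = updated.get(available_mw, 0.0) + prob * outage_rate
        table = updated
    return table


def lole_days(daily_peaks_mw, units):
    """Expected number of days on which available capacity is below the daily peak load."""
    copt = build_copt(units)
    return sum(
        sum(prob for available_mw, prob in copt.items() if available_mw < peak_mw)
        for peak_mw in daily_peaks_mw
    )


# Hypothetical fleet: five 100 MW thermal units, each with a 5% forced outage rate.
FLEET = [(100.0, 0.05)] * 5
```

Calling `lole_days` with a year of daily peaks gives expected loss-of-load days per year, the quantity compared against a 0.1 days/year target. Treating every unit's outages as independent is the simplifying assumption that makes the table easy to build.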

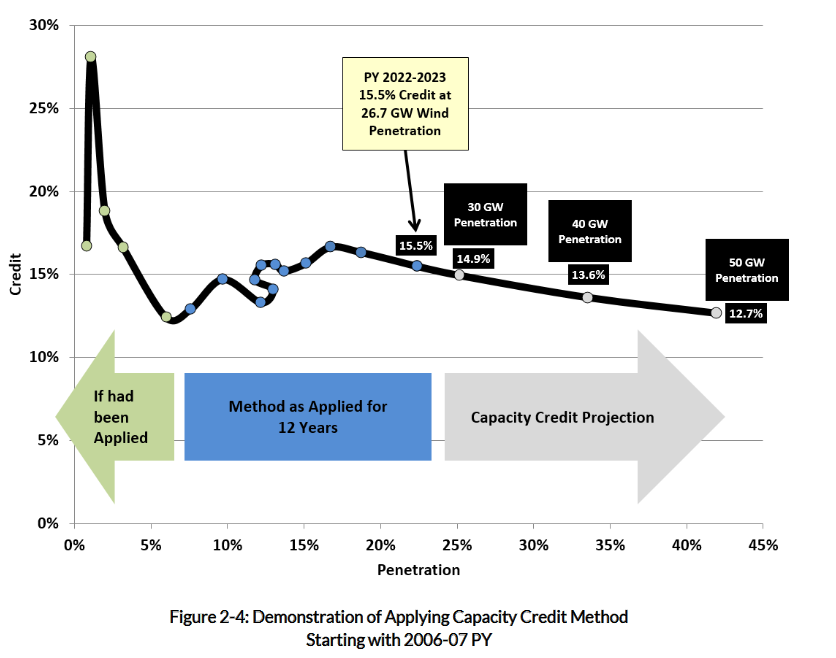

For wind, the Effective Load Carrying Capability (ELCC) calculation depended heavily on historical peak load hours. Its main job was to determine the capacity value of wind based on whether the wind was available during the top eight peak demand hours. As the Midcontinent Independent System Operator (MISO) Wind and Solar Capacity Credit report states, “Tracking the top eight daily peak hours in a year is sufficient to capture the peak load times that contribute to the annual LOLE of 0.1 days/year”.
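
The peak-hour idea in the MISO quote can be sketched as follows: find each day's peak hour, keep the eight days with the highest peaks, and average wind output at those hours as a share of nameplate. This is a hypothetical, simplified proxy written for illustration. The function names and the synthetic data are assumptions, and it is not a full ELCC calculation or MISO's actual procedure.

```python
"""Simplified peak-hour output proxy (hypothetical helper names, synthetic data)."""


def top_daily_peak_hours(hourly_load_mw, top_n=8, hours_per_day=24):
    """Return the hour index of each daily peak for the top_n days with the highest peaks."""
    n_days = len(hourly_load_mw) // hours_per_day
    daily_peaks = []
    for day in range(n_days):
        start = day * hours_per_day
        # Hour of this day with the highest load.
        peak_hour = max(range(start, start + hours_per_day), key=lambda h: hourly_load_mw[h])
        daily_peaks.append((hourly_load_mw[peak_hour], peak_hour))
    # Highest daily peaks first; keep the top_n days.
    daily_peaks.sort(reverse=True)
    return [hour for _, hour in daily_peaks[:top_n]]


def peak_hour_output_share(hourly_load_mw, hourly_wind_mw, nameplate_mw, top_n=8):
    """Average wind output during the top daily peak hours, as a fraction of nameplate."""
    hours = top_daily_peak_hours(hourly_load_mw, top_n)
    average_mw = sum(hourly_wind_mw[h] for h in hours) / len(hours)
    return average_mw / nameplate_mw


# Synthetic 10-day example: each day peaks at hour 17, with a slightly higher peak each day,
# and the hypothetical 100 MW wind farm produces 40 MW at every daily peak.
load = [500.0] * 240
wind = [10.0] * 240
for d in range(10):
    load[d * 24 + 17] = 1000.0 + d
    wind[d * 24 + 17] = 40.0

share = peak_hour_output_share(load, wind, nameplate_mw=100.0)
```

Here the eight highest daily peaks fall on days 2 through 9, and the wind farm's output at those hours works out to 40% of nameplate.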

At 10,000 MW of wind penetration, the initial ELCC values were high, in the 40% range, so the capacity contribution of 10,000 MW of wind was set at 4,000 MW. As more wind was added to the grid (a total of 25,000 MW), this value fell to 15%. Once enough wind was available during the top eight peak hours to improve reliability, that is, to reduce the chance of blackout or brownout conditions, each additional MW of wind added less reliability than the MW before it, so the credit per MW declined.
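
Applying the percentages above to installed capacity makes the effect concrete. This assumes the 15% figure applies as an average across the whole 25,000 MW fleet:

$$
\begin{aligned}
\text{Capacity contribution} &= \text{ELCC} \times \text{installed wind capacity} \\
0.40 \times 10{,}000\ \text{MW} &= 4{,}000\ \text{MW} \\
0.15 \times 25{,}000\ \text{MW} &= 3{,}750\ \text{MW}
\end{aligned}
$$

Under that assumption, the 25,000 MW fleet would be credited with less total capacity than the 10,000 MW fleet was, even though installed wind more than doubled.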

Average ELCC or Marginal ELCC?

Let us consider solar and storage in this ELCC world, because some states have announced goals of 100% carbon-free electricity (by 2040 in New York, by 2045 in California, and by 2050 in Wisconsin). Since it is common knowledge that capacity credit falls as wind penetration increases, renewable developers want to avoid a similar situation with solar. Hence, energy storage is added at the point of interconnection to prop up the capacity value of solar. These interconnection requests are called hybrid interconnections. Most are solar+storage, but they can also pair wind or other forms of renewables with storage.

Calculating ELCC for solar alone and for solar+storage clearly shows the capacity benefit that storage provides to the grid operator. Where capacity markets exist, grid operators must run these ELCC calculations to determine capacity credit for all renewable resources. Since most states lack the engineering staff to run these complex calculations, they apply the grid operator’s capacity credit values to renewable resources in their Integrated Resource Plan (IRP) proceedings.

With solar and storage in the mix, ELCC is now discussed in at least two ways: an average ELCC (as in MISO’s wind capacity credit) and a marginal ELCC (as in the California IRP). An average ELCC measures the combined contribution of the entire fleet of a resource type and spreads it evenly across the fleet’s installed capacity. A marginal ELCC, on the other hand, measures what the next MW of the resource contributes toward the 1-day-in-10-years reliability standard (an LOLE of 0.1 days/year), because that MW could come from either a thermal or a non-thermal resource. Both have their uses, but grid operators appear focused on adopting marginal ELCC to ensure reliability, because they are convinced that more renewables on the grid require more dispatchable capacity.
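
The difference between the two definitions can be illustrated with a toy model. The sketch below relies heavily on assumptions and does not reproduce the MISO or California methodology. It uses a hypothetical 24-hour evening-peaking load, a midday solar shape, and a normal distribution for the capacity margin in place of a full LOLE simulation. ELCC is computed the conventional way: the extra flat load the system can carry with the resource added while holding expected loss-of-load hours at their baseline value.

```python
"""Toy average vs. marginal ELCC comparison (hypothetical system, simplified reliability model)."""
import math

# Hypothetical 24-hour profiles: evening demand peak (hours 16-20), midday solar peak.
LOAD_MW = [700.0] * 6 + [800.0] * 4 + [950.0] * 6 + [1050.0] * 5 + [800.0] * 3
SOLAR_PER_MW = ([0.0] * 7
                + [0.1, 0.3, 0.5, 0.7, 0.85, 0.9, 0.85, 0.7, 0.5, 0.3, 0.1]
                + [0.0] * 6)
CAPACITY_MW = 1200.0  # assumed firm capacity of the existing fleet
SIGMA_MW = 80.0       # assumed spread of the capacity margin (outages plus load error)


def loss_of_load_hours(net_load_mw):
    """Sum of hourly shortfall probabilities, using a normal model for the capacity margin."""
    return sum(
        0.5 * (1.0 + math.erf((load - CAPACITY_MW) / (SIGMA_MW * math.sqrt(2.0))))
        for load in net_load_mw
    )


def elcc_mw(solar_mw, iterations=60):
    """Extra flat load (MW) the system can carry with solar_mw added, at baseline reliability."""
    target = loss_of_load_hours(LOAD_MW)
    output = [solar_mw * s for s in SOLAR_PER_MW]
    # ELCC lies between 0 and the resource's maximum hourly output; bisect on it.
    low, high = 0.0, max(output)
    for _ in range(iterations):
        mid = (low + high) / 2.0
        risk = loss_of_load_hours([load + mid - out for load, out in zip(LOAD_MW, output)])
        if risk > target:
            high = mid
        else:
            low = mid
    return low


def average_elcc(solar_mw):
    """Fleet ELCC spread evenly over the fleet's installed capacity."""
    return elcc_mw(solar_mw) / solar_mw


def marginal_elcc(solar_mw, step_mw=50.0):
    """ELCC of the next step_mw of solar, per MW added."""
    return (elcc_mw(solar_mw + step_mw) - elcc_mw(solar_mw)) / step_mw


if __name__ == "__main__":
    for mw in (100.0, 500.0, 1000.0):
        print(f"{mw:6.0f} MW solar: average {average_elcc(mw):.1%}, "
              f"marginal {marginal_elcc(mw):.1%}")
```

In this toy system, midday solar barely overlaps the evening peak. As penetration grows, the marginal value of additional solar shrinks much faster than the average value, which is the pattern developers are concerned about.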

Interestingly, renewable advocates are presenting data showing that thermal resources such as natural gas units have performance problems even when assigned 100% capacity value. These include frozen gas compressor stations and pipeline unavailability, in addition to the units' regular operational and maintenance issues. Utilities typically classify all such supply constraints under the “Outside Management Control” (OMC) cause codes in the NERC Generating Availability Data System (GADS) database. Excluding OMC events produces better outage statistics (XEFORd, the EFORd calculated with OMC events excluded), which in turn gives those thermal resources a higher unforced capacity value.
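
The effect of the OMC exclusion on unforced capacity can be shown with simple arithmetic. The sketch below is a simplification under stated assumptions. It uses a plain forced outage rate instead of the full demand-weighted EFORd formula and simply drops OMC-coded hours from the forced outage hours. It also applies the common convention that unforced capacity equals installed capacity times one minus the outage rate. The unit and its hours are hypothetical.

```python
"""Simplified effect of excluding OMC outage hours on unforced capacity (hypothetical unit)."""


def forced_outage_rate(service_hours, forced_outage_hours):
    """Simplified forced outage rate FOH / (FOH + SH); not the full demand-weighted EFORd."""
    return forced_outage_hours / (forced_outage_hours + service_hours)


def unforced_capacity_mw(installed_mw, outage_rate):
    """Unforced capacity under the common convention UCAP = ICAP x (1 - outage rate)."""
    return installed_mw * (1.0 - outage_rate)


# Hypothetical 400 MW gas unit: 4,000 service hours and 300 forced outage hours,
# 200 of which were coded Outside Management Control (e.g., gas supply interruptions).
INSTALLED_MW = 400.0
SERVICE_HOURS = 4000.0
FORCED_OUTAGE_HOURS = 300.0
OMC_OUTAGE_HOURS = 200.0

# All forced outage hours counted vs. OMC hours simply removed (assumed treatment).
rate_all = forced_outage_rate(SERVICE_HOURS, FORCED_OUTAGE_HOURS)
rate_excluding_omc = forced_outage_rate(SERVICE_HOURS, FORCED_OUTAGE_HOURS - OMC_OUTAGE_HOURS)

ucap_all = unforced_capacity_mw(INSTALLED_MW, rate_all)
ucap_excluding_omc = unforced_capacity_mw(INSTALLED_MW, rate_excluding_omc)
```

With these made-up numbers, the outage rate drops from about 7.0% to about 2.4%, and unforced capacity rises from about 372 MW to about 390 MW.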

Average ELCC works best

What works for grid operators does not work for renewable developers. Most of these solar interconnections include storage to maintain the capacity value of solar. Solar production hours typically do not line up with peak demand hours, so it makes sense to use a battery that charges during peak solar production and discharges during peak demand. The storage ensures that solar, rather than a thermal resource, serves the grid during peak demand hours, which is the main goal behind those states’ plans to achieve carbon-free electricity. Because of this storage investment, a marginal ELCC method does not work for renewable developers. As more renewables are added to the grid, marginal capacity value approaches zero. Under an average ELCC, the decrease in capacity value is gradual and does not fall to 0%.
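
The charge-at-midday, discharge-at-peak idea can be sketched with a deliberately simple, hypothetical dispatch rule. The battery discharges at full power in the highest-load hours and charges from solar in every other hour until it is full. The function name, profiles, battery size, and 85% round-trip efficiency are all assumptions. The rule also assumes the demand peak comes after the solar hours, and it is not how grid operators model storage in ELCC studies.

```python
"""Toy solar+storage shifting (hypothetical dispatch rule and profiles, illustration only)."""
import math


def shift_solar_to_peak(solar_mw, load_mw, power_mw, energy_mwh, efficiency=0.85):
    """Charge the battery from solar, then discharge it in the highest-load hours.

    Assumed rule: discharge at up to power_mw in the N highest-load hours, where
    N = ceil(energy_mwh / power_mw); charge from solar in every other hour until full.
    Round-trip losses are applied on charging. Returns hourly output at the
    point of interconnection.
    """
    n_discharge = math.ceil(energy_mwh / power_mw)
    by_load = sorted(range(len(load_mw)), key=lambda h: load_mw[h], reverse=True)
    discharge_hours = set(by_load[:n_discharge])
    stored_mwh = 0.0
    output = []
    for hour, solar in enumerate(solar_mw):
        if hour in discharge_hours:
            discharge = min(power_mw, stored_mwh)
            stored_mwh -= discharge
            output.append(solar + discharge)
        else:
            headroom_mwh = max(0.0, energy_mwh - stored_mwh)
            charge = min(power_mw, solar, headroom_mwh / efficiency)
            stored_mwh += charge * efficiency
            output.append(solar - charge)
    return output


# Hypothetical evening-peaking load and a 500 MW solar plant with a 100 MW / 400 MWh battery.
LOAD_MW = [700.0] * 6 + [800.0] * 4 + [950.0] * 6 + [1050.0] * 5 + [800.0] * 3
SOLAR_SHAPE = ([0.0] * 7
               + [0.1, 0.3, 0.5, 0.7, 0.85, 0.9, 0.85, 0.7, 0.5, 0.3, 0.1]
               + [0.0] * 6)
solar = [500.0 * s for s in SOLAR_SHAPE]
hybrid = shift_solar_to_peak(solar, LOAD_MW, power_mw=100.0, energy_mwh=400.0)
```

With these hypothetical profiles, output during peak hours 16 to 20 rises from roughly 150, 50, 0, 0, and 0 MW for solar alone to roughly 250, 150, 100, 100, and 0 MW for solar+storage. The last peak hour is left uncovered because the 4-hour battery is empty by then.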

Additionally, grid operators have convinced themselves that duration-limited resources must be discounted in the ELCC calculation for capacity purposes. A battery can discharge for only a limited number of hours; hence it is “duration limited.” It also doesn’t help small renewable developers when grid operators and other transmission providers bury them in acronyms and complex LOLE calculations.
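
A simple way to see why duration matters is to look at a peak period of consecutive hours. The constant output a battery can sustain across the whole window is limited by its stored energy as well as its power rating. The sketch below shows that arithmetic. The function name and battery size are hypothetical, losses and recharging are ignored, and it is not any grid operator's accreditation rule.

```python
def sustained_output_mw(power_mw, energy_mwh, window_hours):
    """Constant output a battery can hold for the whole window, ignoring losses and recharging."""
    if window_hours <= 0:
        raise ValueError("window_hours must be positive")
    # Limited by the power rating or by stored energy spread over the window.
    return min(power_mw, energy_mwh / window_hours)


# Hypothetical 100 MW / 400 MWh (4-hour) battery facing peak windows of different lengths.
for window in (2, 4, 6, 8):
    print(window, round(sustained_output_mw(100.0, 400.0, window), 1))
```

The 4-hour battery holds its full 100 MW through a 4-hour window, but it can sustain only about 66.7 MW across 6 hours and 50 MW across 8 hours.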

Conclusion

Transmission providers and grid operators must be technology agnostic and should not care which technology provides capacity, as long as it performs when needed. If renewable developers must wade through complex ELCC calculations to assess their resources’ capacity contributions, it is only fair for thermal resources and their utility owners to be evaluated through the same ELCC lens. During the events leading up to load shed or blackout conditions, every MW counts. Whether that MW comes from a thermal or non-thermal resource, on the supply or the demand side, should not matter to the balancing authority.